Hi @staff, I was looking for a human model to test my detector_faces node. However, there isn't one available in the Robot Ignite Academy sources, as you can see below:

r:/home/simulations/public_sim_ws/src/all/models_spawn_library/models_spawn_library_pkg/models$ ls

OliveTree1 Watermelon banana bowl condiment cup hammer saucepan toaster

RubberDucky aibo_ball beer coffee_table construction_cone dumpster monkey_wrench scissors two_pictures

Target aibo_bone book coke_can cricket_ball floor_lamp newspaper tennis_ball wood_cube_2_5cm

So to finalize the Jibo project I need one human model. Could you please point me to a GitHub repository that I can clone and use? I did not find one on the internet. I also know that when I use Gazebo on my notebook, a human model can be found in the Gazebo library, through the “Insert” tool, but here in your virtual environment I cannot use the Gazebo tools. Why?

Thanks in advance

Well, I took 2 models from the Gazebo models repository: https://bitbucket.org/osrf/gazebo_models/downloads/

1 - Person_standing

2 - Person_walking

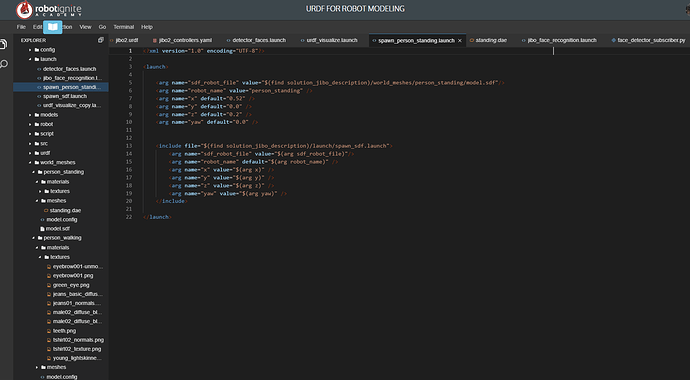

I uploaded all these models to my “solution_jibo_package”, but I could not spawn them in Gazebo. I used the same structure as the one used to spawn the banana in class 3 of URDF, that is, a launch file. Unfortunately it did not work. My launch file is below:

How can I spawn people meshes/models in Gazebo successfully? @staff
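For context, spawning an SDF model from a package usually comes down to calling the `spawn_model` script from `gazebo_ros`, either from a launch file or by hand. Below is a minimal sketch that only builds that command line, so it can be checked without a running simulation. The model path is hypothetical (the real location inside `solution_jibo_package` is not shown in this thread), and `build_spawn_command` is just an illustrative helper name.

```python
import shlex


def build_spawn_command(model_name, sdf_path, x=0.0, y=0.0, z=0.0):
    """Return the argv list that asks gazebo_ros spawn_model to spawn an SDF file."""
    return [
        "rosrun", "gazebo_ros", "spawn_model",
        "-sdf",                 # the file is SDF, not URDF
        "-file", sdf_path,      # path to model.sdf on disk
        "-model", model_name,   # name the model gets inside Gazebo
        "-x", str(x), "-y", str(y), "-z", str(z),
    ]


# Hypothetical path: adjust it to wherever the model was uploaded.
cmd = build_spawn_command(
    "person_standing",
    "/path/to/solution_jibo_package/models/person_standing/model.sdf",
    x=1.0,
)
print(" ".join(shlex.quote(part) for part in cmd))
```

The same arguments can go in the `args` attribute of a `<node pkg="gazebo_ros" type="spawn_model" ...>` entry in a launch file.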

Isn't there a way to access the Gazebo tools interface in the Robot Ignite Academy virtual environment, such as the one for inserting models? I think it would be easier to copy a models directory into the Gazebo sources and then use it through the icons in the Gazebo interface.

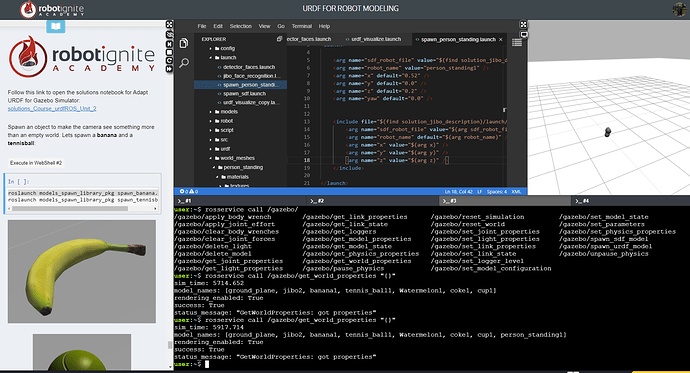

Well, now I have advanced a little. I could “pseudo-spawn” the models in Gazebo: get properties says they are there, but they are not. Only my robot appears:

I am really confused about what is going on…
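One possible cause of a model that is listed but not visible is that its mesh URIs of the form `model://...` cannot be resolved, because the directory holding the model is not on `GAZEBO_MODEL_PATH`. That may or may not be what is happening here. The sketch below checks an SDF string against a list of model directories. The sample SDF and the mesh file name are illustrative assumptions, not the contents of the downloaded model, and the check ignores Gazebo's default model locations.

```python
import os
import xml.etree.ElementTree as ET


def unresolved_mesh_uris(sdf_text, model_dirs):
    """Return the model:// URIs in the SDF that do not exist under any of model_dirs."""
    missing = []
    for uri in ET.fromstring(sdf_text).iter("uri"):
        text = (uri.text or "").strip()
        if not text.startswith("model://"):
            continue
        relative = text[len("model://"):]
        if not any(os.path.exists(os.path.join(d, relative)) for d in model_dirs):
            missing.append(text)
    return missing


# Directories taken from the environment; empty entries are dropped.
search_dirs = [d for d in os.environ.get("GAZEBO_MODEL_PATH", "").split(os.pathsep) if d]

# Illustrative SDF only; replace it with the contents of the real model.sdf.
sample = """<sdf version="1.5"><model name="person_standing"><link name="link">
  <visual name="visual"><geometry><mesh>
    <uri>model://person_standing/meshes/standing.dae</uri>
  </mesh></geometry></visual></link></model></sdf>"""

print(unresolved_mesh_uris(sample, search_dirs))
```

Any URI printed here points at a mesh that was not found in the listed directories, which would be worth checking before looking at anything else.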

This is the Git repository we use for spawning persons: https://bitbucket.org/theconstructcore/person_sim/src/master/

It's already installed in the RIA system, so you should be able to spawn the persons inside the simulation:

roslaunch person_sim spawn_standing_person.launch

Thanks @duckfrost

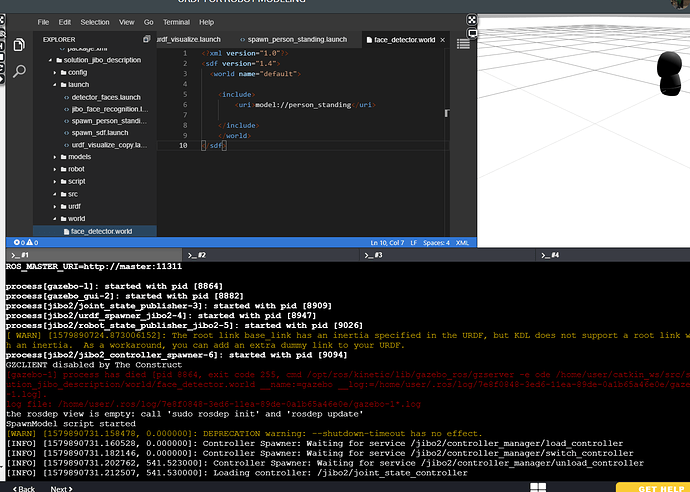

I was trying another way, creating a .world file, but I was also having issues with gzserver…

Do you know why it does not work this way? The message says that gzserver is disabled by The Construct…

I will try your solution…hope I can

I’m trying the solution now to show you.

Also remember that although RIA is for learning, if you want to do more development-oriented work, ROS Development Studio is the place to do it, because you have much more control.

Here is how to spawn a person and move it around so you can position it as you want:

roslaunch person_sim init_standing_person.launch

roslaunch person_sim move_person_standing.launch

[VIDEO DEMO](https://youtu.be/Sbje5O6ZVCY)

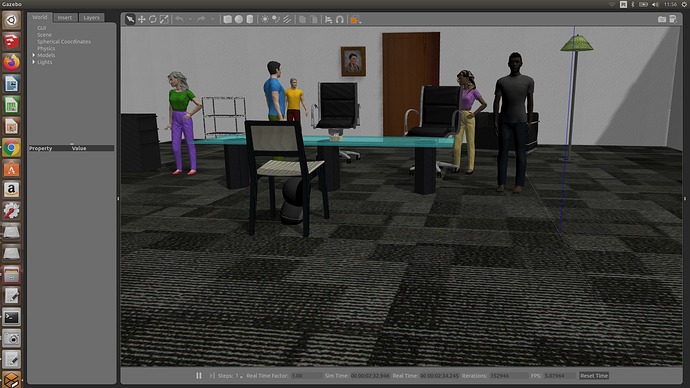

OK @duckfrost, it really is possible to spawn this human model. However, it takes a lot of time in the Robot Ignite Academy virtual environment. So, as you recommended, I used ROS Development Studio, which worked well for the example from Live Class 18. But for a robot with controllers, it did not. I hit a lot of bugs related to versions, gzserver, the way the URDF model was written (joints), hardwareInterface, etc., plus “deprecated tools” that were not recognized. So in the end I spawned my robot and my own environment for Jibo on my notebook. After correcting the hardwareInterface in the joints inside my URDF, setting the plugin (camera) to True, and fixing more bugs, I could finally spawn my objects (SDF meshes) and my URDF together…

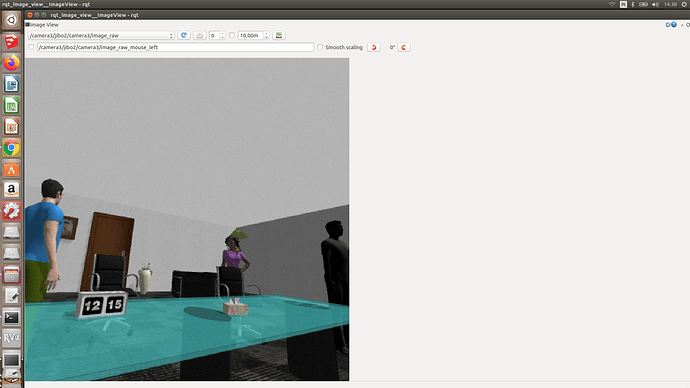

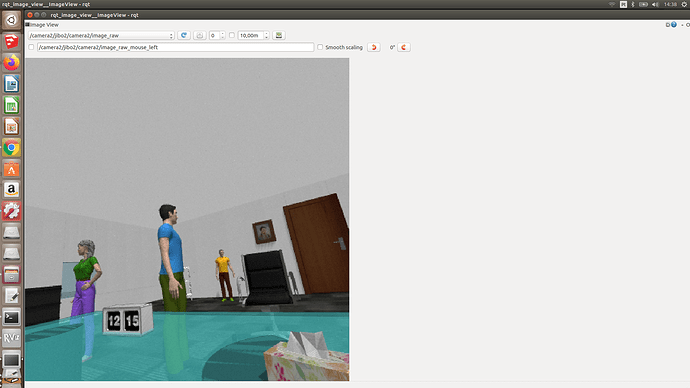

The result is below:

However, @duckfrost, as you can see, my robot model stayed under the chair, and not on top of it as I wanted (to get a better view of the environment). I tried numerous times and calculated the Z position carefully, but the robot always appears rolled under the table or stable.

What can I do to put it on top of an object? @staff @bayodesegun
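As a rough sketch of the arithmetic behind the Z position: the spawn height of the robot's base link needs to be the height of the supporting surface, plus the distance from the base link origin down to the robot's lowest point, plus a small clearance so the two do not start out overlapping. All numbers below are hypothetical and would have to be read from the actual chair and Jibo models.

```python
def spawn_z(support_top_z, base_to_bottom, clearance=0.02):
    """Z for the robot's base link so its lowest point starts just above the support."""
    return support_top_z + base_to_bottom + clearance


# Hypothetical values: seat top at 0.45 m, base link origin 0.10 m above
# the robot's lowest point, 2 cm of clearance.
z = spawn_z(0.45, 0.10)
print(round(z, 3))
```

Even with a correct Z, the robot can still end up on the floor if the supporting model has no collision geometry or is spawned after the robot, so both are worth checking.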

Thanks in advance

New Doubt:

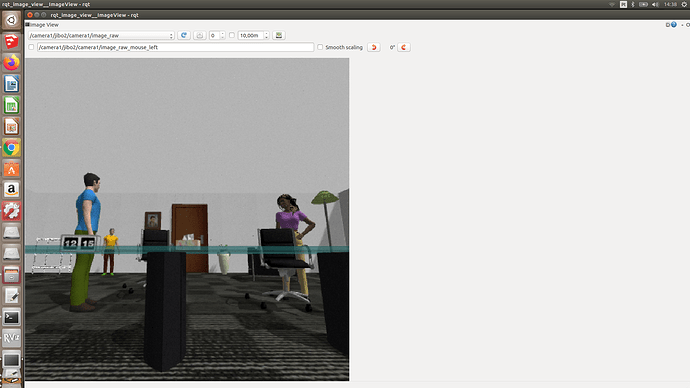

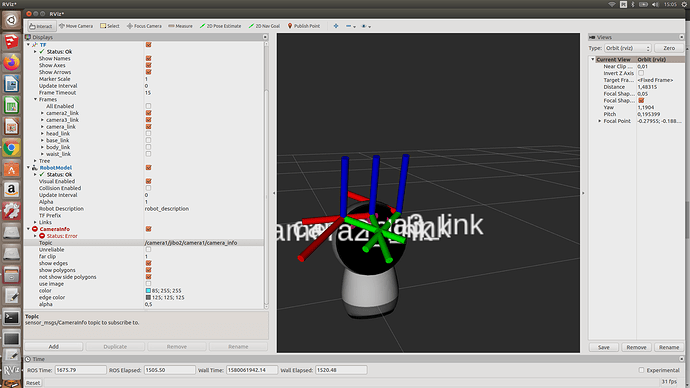

Well, after a lot of work I have adjusted the position/orientation of the cameras that I put on Jibo:

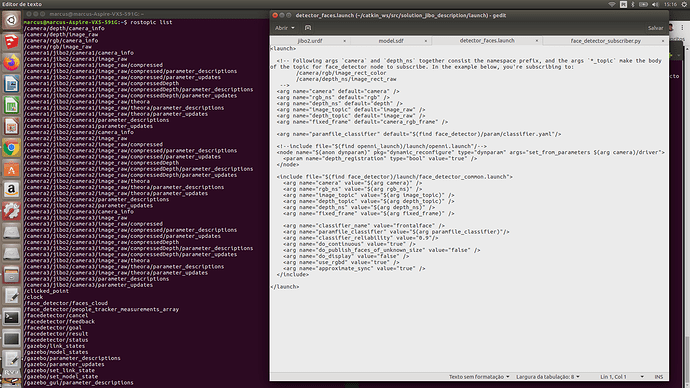

After that, I tried to run detector_Faces.launch.

I have studied the values/args to be set in the launch file, such as the required subscribers to face_detector/people_tracker_measurements and face_detector/faces_cloud, as recommended at https://answers.ros.org/question/210751/face_detector-not-detecting-with-rgbd-camera/?answer=210752?answer=210752#post-id-210752. But the issue is that I could not work out exactly which topics I need to change in my launch file, given the topics published by Jibo's 3 cameras:

Could you assist me please?

@staff
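To make the topic question more concrete, here is a small sketch of how a full topic name could be split into launch arguments. It assumes the launch file follows the layout of the stock `face_detector.rgbd.launch`, where the image topics are composed as `camera/rgb_ns/image_topic` and `camera/depth_ns/depth_topic`. That layout is an assumption about detector_Faces.launch, and the two topic names used below are hypothetical, not the ones published by Jibo's cameras.

```python
def split_camera_topic(topic):
    """Split '/prefix/.../ns/name' into (camera, namespace, name)."""
    parts = topic.strip("/").split("/")
    if len(parts) < 3:
        raise ValueError("expected at least camera/namespace/topic: %r" % topic)
    camera = "/" + "/".join(parts[:-2])
    return camera, parts[-2], parts[-1]


def face_detector_args(rgb_topic, depth_topic):
    """Derive the launch arguments from one RGB topic and one depth topic."""
    rgb_cam, rgb_ns, image_topic = split_camera_topic(rgb_topic)
    depth_cam, depth_ns, depth_name = split_camera_topic(depth_topic)
    if rgb_cam != depth_cam:
        raise ValueError("RGB and depth topics must share one camera prefix")
    return {
        "camera": rgb_cam,
        "rgb_ns": rgb_ns,
        "image_topic": image_topic,
        "depth_ns": depth_ns,
        "depth_topic": depth_name,
    }


# Hypothetical topic names; take the real ones from `rostopic list`.
args = face_detector_args(
    "/jibo/head_camera/rgb/image_raw",
    "/jibo/head_camera/depth/image_raw",
)
for name, value in sorted(args.items()):
    print("%s:=%s" % (name, value))
```

Each printed `name:=value` pair corresponds to one `<arg>` in a launch file of that layout. If the RGB and depth images come from different camera prefixes, this scheme does not apply directly and the topics would need explicit remaps instead.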

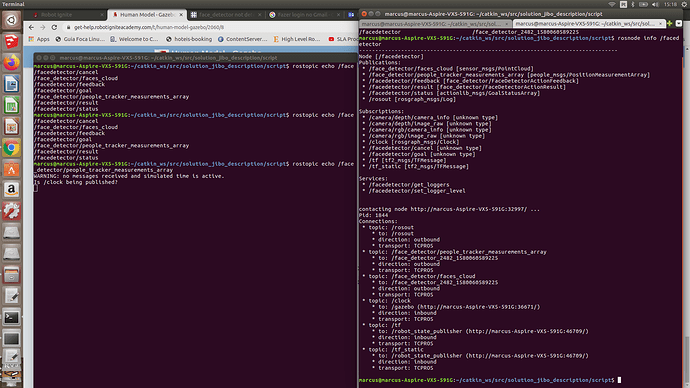

I have this feedback from my system:

I really want to finish this project before it finishes with me!!! hahaha

So @bayodesegun, as I imagine you are online, could you please assist me here? This is a continuation of the issue I described in the previous post…

Thanks for the support…