Hi @staff experts, could you give me a hand, please?

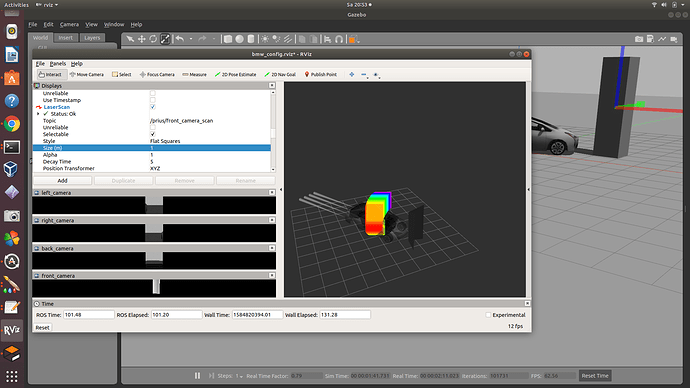

I am trying to use a camera to generate the map for SLAM because the real car we want to test does not have a laser. However, when I convert the point cloud from camera/depth/points to laser scan data, the scan is “born” on top of my camera frame and represents the object as very close to the camera instead of in its real position, far from the car, as you can see below:

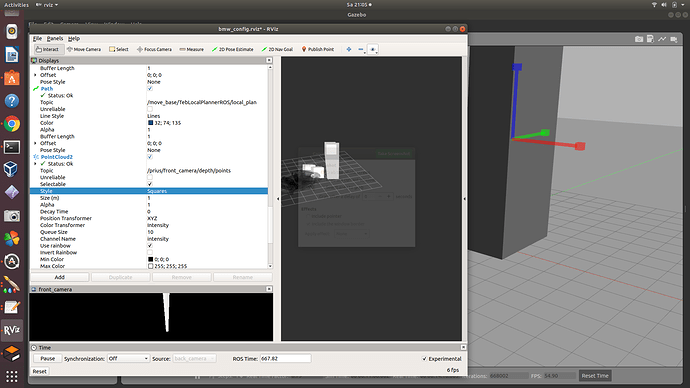

I don’t know what is going on, because the PointCloud2 plugin, which provides the input data for the 2D laser conversion, returns the right distance of the object from the car, as shown in the image below

Should I add more parameters when performing this conversion?
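To make the question concrete, here is a minimal Python sketch of the geometry I understand a point-cloud-to-scan conversion to use. It is only an illustration under assumptions, not a diagnosis: I assume the converter projects each point onto the x/y plane of a frame with x forward, y left and z up, and that my cloud might be expressed in an optical frame (x right, y down, z forward). The function names `scan_range_and_angle` and `optical_to_body` are hypothetical helpers, not part of any ROS package.

```python
import math


def scan_range_and_angle(x, y, z):
    """Project a 3D point onto the scan plane of a frame with
    x forward, y left, z up. The height z is ignored."""
    return math.hypot(x, y), math.atan2(y, x)


def optical_to_body(x, y, z):
    """Rotate a point from an optical frame (x right, y down, z forward)
    into a body frame (x forward, y left, z up)."""
    return z, -x, -y


# Hypothetical obstacle: 5 m straight ahead and 0.2 m to the right,
# written in optical-frame coordinates.
p_optical = (0.2, 0.0, 5.0)

# Treating optical coordinates as if they were body coordinates
# collapses the forward distance, so the range comes out tiny.
wrong_range, _ = scan_range_and_angle(*p_optical)

# Rotating into the body frame first keeps the forward distance.
right_range, right_angle = scan_range_and_angle(*optical_to_body(*p_optical))

print(wrong_range, right_range, right_angle)
```

If this assumption matches my setup, the short ranges next to the camera would come from the frame the conversion runs in and not from the depth data itself, which would also be consistent with the point cloud looking correct in RViz.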

I used this video from Ricardo as a tutorial

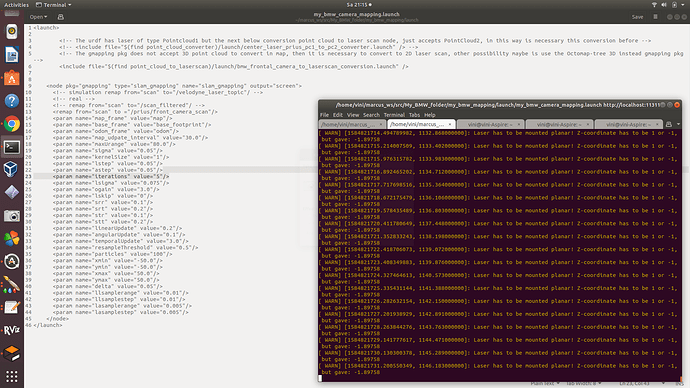

My gmapping node description is below, with the warning information:

The warning says the laser needs to be mounted planar (I think this means parallel to the ground, from what I have read in some ROS Answers posts). So should I change the sensor joint pose (position/orientation in the URDF) so that it is planar? Would this solve the issue so that the map can be produced?
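As I understand the warning, the check is about whether the scan frame's z axis lines up with the base frame's up direction. The sketch below shows that idea in plain Python; it is my own approximation and not gmapping's code. The helper names and the tolerance value `1e-3` are assumptions, and the roll/pitch angles are the fixed-axis `rpy` values a URDF joint origin would carry.

```python
import math


def up_axis_z_in_sensor_frame(roll, pitch):
    """z-component of the base frame's up vector (0, 0, 1) expressed in a
    sensor frame rotated by the given roll and pitch (radians).
    Yaw does not affect this value."""
    return math.cos(roll) * math.cos(pitch)


def is_planar(roll, pitch, tol=1e-3):
    """True when the sensor z axis is parallel to the base up axis,
    either upright (z near 1) or upside down (z near -1).
    The tolerance is an assumed value for illustration."""
    return abs(abs(up_axis_z_in_sensor_frame(roll, pitch)) - 1.0) < tol


print(is_planar(0.0, 0.0))           # level mount
print(is_planar(math.pi, 0.0))       # upside-down mount
print(is_planar(0.0, 0.1))           # tilted about 5.7 degrees in pitch
print(is_planar(-math.pi / 2, 0.0))  # roll of -90 degrees, as in an optical frame
```

Under these assumptions, a scan published in a level frame passes the check, while a scan published in a tilted frame or in the camera's optical frame does not.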

Even if I fix this planar issue, I don’t know why the laser scan data is not being generated at the right distance from the car. My files for this camera mapping task are below:

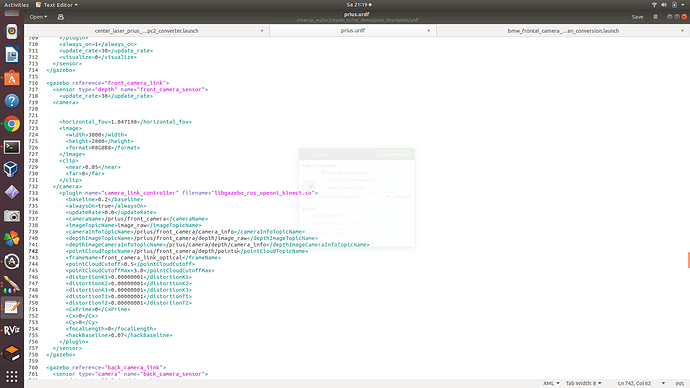

The plugin for the depth camera I used:

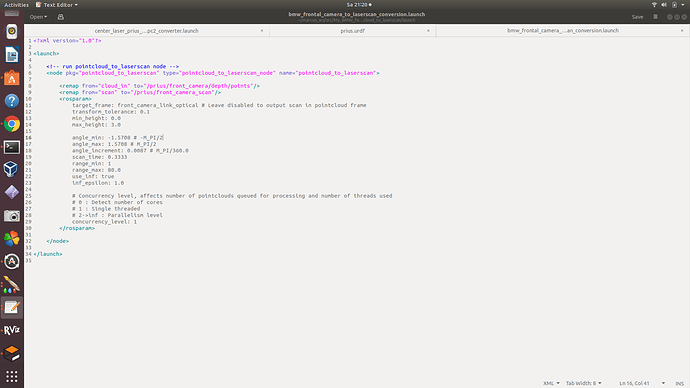

The conversion node I used, based on the video tutorial I shared above

I am not an expert in cameras, so I spent all day setting and re-setting the camera parameter values in the plugin, in the conversion node, and in the RViz display config. Sometimes the camera image was displayed better, sometimes not, but this depth camera image was considerably worse than the one from the original cameras set up for the Prius. The problem is that those were not depth cameras, so I needed to replace them in order to convert to laser scan data for use with move_base_node/navigationstack/gmapping.

Any advice on how to fix this camera/laser/position/mapping issue?

Thanks in advance