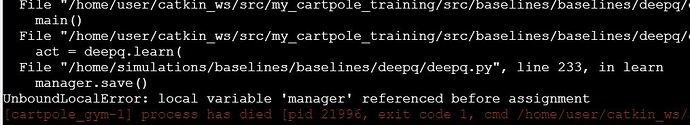

If you launch the file train_cartpole.py, it won't run the local version (I tried everything), and you receive this error:

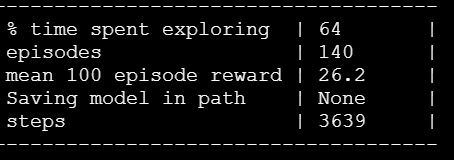

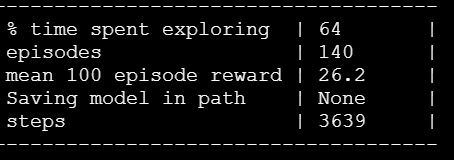

The `manager` variable is assigned inside an `if` statement near the beginning of `deepq.py`. That `if` statement is not executed, but another one near the end of the file is: it assumes there's a trained model that needs to be loaded, and it uses the variable in a save statement, `manager.save`, which throws the error. I tried tracing the logic, but this file alone is over 200 lines, and doing it in vim proved too difficult. I changed the logic so that the assignment would execute, but this produced a different error. Finally, I just commented out the `manager.save` statement and it runs, but the output shows the saved model as none:
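The failure described above follows a common Python pattern: a name is bound only inside one `if` branch and then used unconditionally later. The sketch below is a hypothetical, stripped-down reproduction (`FakeManager`, `train_buggy` and `train_guarded` are illustrative names, not the actual code in `deepq.py`). It shows why the crash happens when no load path is given, and that a `None` guard avoids the crash only by skipping the save, much like commenting the line out. Passing a real path, as the ROSified script below does with `load_path=model_path`, makes the assigning branch run instead.

```python
class FakeManager:
    """Hypothetical stand-in for a checkpoint manager object."""

    def save(self):
        return "checkpoint saved"


def train_buggy(load_path=None):
    # `manager` is only bound when a load path is supplied...
    if load_path is not None:
        manager = FakeManager()
    # ...but it is used unconditionally here, so load_path=None raises
    # UnboundLocalError (a subclass of NameError).
    return manager.save()


def train_guarded(load_path=None):
    manager = None
    if load_path is not None:
        manager = FakeManager()
    # Only save when a manager was actually created; otherwise skip saving.
    if manager is not None:
        return manager.save()
    return None


if __name__ == "__main__":
    try:
        train_buggy()
    except UnboundLocalError as err:
        print("buggy version:", type(err).__name__)
    print("guarded, no path:", train_guarded())
    print("guarded, with path:", train_guarded("model.pkl"))
```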

So when the model is trained it tries to save it which produces this error:

I used rosrun to run the downloaded files (they were in the src directory of the package; I tried other locations too). So either I'm doing something wrong, I'm missing a step, or there is an error. If anyone has completed this without errors, please let me know what you did so I can see whether I missed a step or something else.

Hello @hskramer,

You need to run the “ROSified” version of the script, this one:

```python
#!/usr/bin/env python

import gym
from baselines import deepq
# Imported so that the CartPoleStayUp-v0 task environment is registered with gym
from openai_ros.task_envs.cartpole_stay_up import stay_up
import rospy
import rospkg
import os


def callback(lcl, _glb):
    # stop training if the mean reward of the last 100 finished episodes is >= 199
    is_solved = lcl['t'] > 100 and sum(lcl['episode_rewards'][-101:-1]) / 100 >= 199
    return is_solved


def main():
    rospy.init_node('cartpole_training', anonymous=True, log_level=rospy.WARN)
    env = gym.make("CartPoleStayUp-v0")

    # get an instance of RosPack with the default search paths
    rospack = rospkg.RosPack()
    # list all packages, equivalent to rospack list
    rospack.list()
    # get the file path of the my_cartpole_training package
    path_pkg = rospack.get_path('my_cartpole_training')
    model_path = os.path.join(path_pkg, "cartpole_model.pkl")

    act = deepq.learn(
        env,
        network='mlp',
        lr=1e-3,
        total_timesteps=100000,
        buffer_size=50000,
        exploration_fraction=0.1,
        exploration_final_eps=0.02,
        print_freq=10,
        checkpoint_freq=10,
        callback=callback,
        load_path=model_path
    )


if __name__ == '__main__':
    main()
```
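The `callback` in the script stops training once the mean reward over recent episodes reaches 199. The sketch below runs the same expression on hand-built `lcl` dictionaries so the slicing is easier to see; the dictionaries (`solved_locals`, `unsolved_locals`, `early_locals`) are made-up values, not output from a real run. As far as I can tell from the baselines `deepq` code, the last entry of `episode_rewards` is the episode still in progress, which is why the slice `[-101:-1]` skips it and averages the 100 completed episodes before it.

```python
def callback(lcl, _glb):
    # stop training if the mean reward of the last 100 finished episodes is >= 199
    is_solved = lcl['t'] > 100 and sum(lcl['episode_rewards'][-101:-1]) / 100 >= 199
    return is_solved


# Hypothetical locals: 100 finished episodes at reward 200, plus an
# in-progress episode (last entry) that has only collected 3 so far.
solved_locals = {'t': 5000, 'episode_rewards': [200.0] * 100 + [3.0]}

# Same shape, but one finished episode scored 0, dropping the mean to 198.
unsolved_locals = {'t': 5000, 'episode_rewards': [200.0] * 99 + [0.0] + [3.0]}

# Too early: t has not passed 100, so the reward sum is never evaluated.
early_locals = {'t': 50, 'episode_rewards': [200.0] * 101}

print(callback(solved_locals, None))    # True
print(callback(unsolved_locals, None))  # False
print(callback(early_locals, None))     # False
```

Because the in-progress episode is excluded, a low partial reward in the current episode (the `3.0` above) does not drag the average down.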

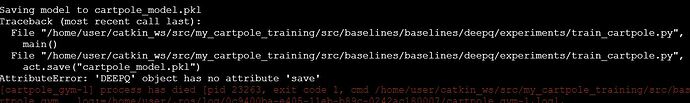

I’ve been doing some tests here and it seems to work properly:

In any case, I’ve noticed that the unit is very confusing. It’s not clear at all what to do at each step, so I’m going to rewrite some things in order to make it easier to follow.

Yes, it's working now, thanks. In my original post, where I suggested that the whole Machine Learning path needs a good review, I wrote that before reaching this unit; compared to some other units, this one isn't that bad, and some are really hard to follow. I wish I hadn't focused so much on the file in the simulation and tried to fix it, but I learned something.

Thanks,

Harry