Dear rtellez,

Hope you are doing well.

Sorry for the late response. I had some personal issues and couldn’t spend any time on this project for couple of months.  However, now everyhing is resolved and I’m back!

However, now everyhing is resolved and I’m back!

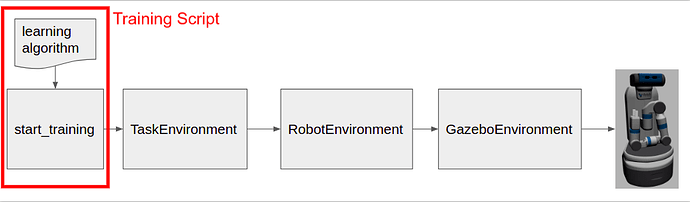

Back to my question. Basically what I am trying to do right now is to as an extension to the course I took from here, I’m working on a personal project to teach a drone to hover using OpenaiRos on gazebo environment, with RotorS simulator: https://github.com/ethz-asl/rotors_simulator (This simulator offers multiple drone models, and also has actuators for the motor control as well – I’m also thinking of doing a test on a real drone after this project for completeness).

For this, I also somehow customized the RobotEnvironment a bit Like this

#! /usr/bin/env python

import numpy

import rospy

import time

from openai_ros import robot_gazebo_env

from std_msgs.msg import Float64

from sensor_msgs.msg import JointState

from sensor_msgs.msg import Imu

from mav_msgs.msg import Actuators

from nav_msgs.msg import Odometry

class HummingbirdHoverEnv(robot_gazebo_env.RobotGazeboEnv):

"""Superclass for all HummingbirdHoverEnv environments.

"""

def __init__(self):

"""

Initializes a new HummingbirdHoverEnv environment.

The Sensors: The sensors accessible are the ones considered useful for AI learning.

Sensor Topic List:

* /hummingbird/ground_truth/imu: IMU of the drone giving acceleration and orientation relative to world.

* /hummingbird/ground_truth/odometry: ODOM

* /hummingbird/motor_speed: Sensing the motor speed

Actuators Topic List:

* /hummingbird/command/motor_speed: Command the motor speed

Args:

"""

rospy.logdebug("Start HummingbirdHoverEnv INIT...")

# Variables that we give through the constructor.

# None in this case

# Internal Vars

# Doesnt have any accessible

self.controllers_list = []

self.robot_name_space = ""

# We launch the init function of the Parent Class robot_gazebo_env.RobotGazeboEnv

super(HummingbirdHoverEnv, self).__init__(controllers_list=self.controllers_list,

robot_name_space=self.robot_name_space,

reset_controls=False,

start_init_physics_parameters=False,

reset_world_or_sim="WORLD")

self.gazebo.unpauseSim()

# self.controllers_object.reset_controllers()

self._check_all_sensors_ready()

# We Start all the ROS related Subscribers and publishers

rospy.Subscriber("/hummingbird/ground_truth/imu", Imu, self._imu_callback)

rospy.Subscriber("/hummingbird/ground_truth/odometry", Odometry, self._odom_callback)

rospy.Subscriber("/hummingbird/motor_speed", Actuators, self._actuators_callback)

self._cmd_motor_speed_pub = rospy.Publisher('/hummingbird/command/motor_speed', Actuators, queue_size=1)

# self._hummingbird_position_pub = rospy.Publisher('/hummingbird/command/motor_speed', JointState, queue_size=1)

self._check_publishers_connection()

self.gazebo.pauseSim()

rospy.logdebug("Finished HummingbirdHoverEnv INIT...")

# Methods needed by the RobotGazeboEnv

# ----------------------------

def _check_all_systems_ready(self):

"""

Checks that all the sensors, publishers and other simulation systems are

operational.

"""

self._check_all_sensors_ready()

return True

# HummingbirdHoverEnv virtual methods

# ----------------------------

def _check_all_sensors_ready(self):

self._check_imu_ready()

self._check_odom_ready()

self._check_actuators_ready()

rospy.logdebug("ALL SENSORS READY")

def _check_imu_ready(self):

self.imu = None

rospy.logdebug("Waiting for //hummingbird/ground_truth/imu to be READY...")

while self.imu is None and not rospy.is_shutdown():

try:

self.imu = rospy.wait_for_message(

"/hummingbird/ground_truth/imu", Imu, timeout=5.0)

rospy.logdebug("Current /hummingbird/ground_truth/imu READY=>")

except:

rospy.logerr(

"Current /hummingbird/ground_truth/imu not ready yet, retrying for getting imu")

return self.imu

def _check_odom_ready(self):

self.odom = None

while self.odom is None and not rospy.is_shutdown():

try:

self.odom = rospy.wait_for_message("/hummingbird/ground_truth/odometry", Odometry, timeout=1.0)

rospy.logdebug("Current /hummingbird/ground_truth/odometry READY=>" + str(self.odom))

except:

rospy.logerr("Current /hummingbird/ground_truth/odometry not ready yet, retrying for getting odom")

return self.odom

def _check_actuators_ready(self):

self.actuators = None

while self.actuators is None and not rospy.is_shutdown():

try:

self.actuators = rospy.wait_for_message("/hummingbird/motor_speed", Actuators, timeout=1.0)

rospy.logdebug("Current /hummingbird/motor_speed READY=>" + str(self.actuators))

except:

rospy.logerr("Current /hummingbird/motor_speed not ready yet, retrying for getting odom")

return self.actuators

def _imu_callback(self, data):

self.imu = data

def _odom_callback(self, data):

self.odom = data

def _actuators_callback(self, data):

self.actuators = data

def _check_publishers_connection(self):

"""

Checks that all the publishers are working

:return:

"""

rate = rospy.Rate(10) # 10hz

while self._cmd_motor_speed_pub.get_num_connections() == 0 and not rospy.is_shutdown():

rospy.logdebug("No subscribers to _cmd_motor_speed_pub yet so we wait and try again")

try:

rate.sleep()

except rospy.ROSInterruptException:

# This is to avoid error when world is rested, time when backwards.

pass

rospy.logdebug("_cmd_motor_speed_pub Publisher Connected")

rospy.logdebug("All Publishers READY")

# Methods that the TaskEnvironment will need to define here as virtual

# because they will be used in RobotGazeboEnv GrandParentClass and defined in the

# TaskEnvironment.

# ----------------------------

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

raise NotImplementedError()

def _init_env_variables(self):

"""Inits variables needed to be initialised each time we reset at the start

of an episode.

"""

raise NotImplementedError()

def _compute_reward(self, observations, done):

"""Calculates the reward to give based on the observations given.

"""

raise NotImplementedError()

def _set_action(self, action):

"""Applies the given action to the simulation.

"""

raise NotImplementedError()

def _get_obs(self):

raise NotImplementedError()

def _is_done(self, observations):

"""Checks if episode done based on observations given.

"""

raise NotImplementedError()

# Methods that the TaskEnvironment will need.

# ----------------------------

def move_motor(self, motor_speed):

motor_speed_value = Actuators()

motor_speed_value.angular_velocities = motor_speed

rospy.logdebug("Motor Velocity>>" + str(motor_speed_value))

self._cmd_motor_speed_pub.publish(motor_speed_value)

# self.wait_until_motor_is_in_vel(motor_speed_value.angular_velocities)

# def init_hummingbird_pose(self, init_pose):

# init_pose_value = JointState()

# init_pose_value.position = init_pose

# rospy.logdebug("Init pose>>" + str(init_pose_value))

# self._hummingbird_position_pub.publish(init_pose_value)

def get_imu(self):

return self.imu

def get_odom(self):

return self.odom

def get_actuators(self):

return self.actuators

def reinit_sensors(self):

"""

This method is for the tasks so that when reseting the episode

the sensors values are forced to be updated with the real data and

"""

And my Task environment goes like this (My initial goal was to make this all work, so I did not change parameters, or did reward shaping yet)

#! /usr/bin/env python

import rospy

import numpy

from gym import spaces

import hummingbird_hover_env

from gym.envs.registration import register

from geometry_msgs.msg import Point

from geometry_msgs.msg import Vector3

from std_msgs.msg import Float64

from tf.transformations import euler_from_quaternion

from openai_ros.task_envs.task_commons import LoadYamlFileParamsTest

max_episode_steps = 10000 # Can be any Value

register(

id='HummingbirdHoverTaskEnvA2C-v0',

entry_point='hummingbird_hover_task_env_a2c:HummingbirdHoverTaskEnv',

max_episode_steps=max_episode_steps,

) ## CHECK NAMING HERE

class HummingbirdHoverTaskEnv(hummingbird_hover_env.HummingbirdHoverEnv):

def __init__(self):

"""

Make hummingbird learn how to hover (Get to a point)

"""

# Load Params from the desired Yaml file

LoadYamlFileParamsTest(rospackage_name="hummingbird_pkg",

rel_path_from_package_to_file="config",

yaml_file_name="hummingbird_a2c_params.yaml") ### Need change

# Only variable needed to be set here

self.num_envs = rospy.get_param('/hummingbird/num_envs')

self.n_actions = rospy.get_param('/hummingbird/n_actions')

self.rpm_max = rospy.get_param("/hummingbird/action/rpm_max")

self.rpm_min = rospy.get_param("/hummingbird/action/rpm_min")

a_high = numpy.array([self.rpm_max, #r1

self.rpm_max, #r2

self.rpm_max, #r3

self.rpm_max, #r4

])

a_low = numpy.array([self.rpm_min, #r1

self.rpm_min, #r2

self.rpm_min, #r3

self.rpm_min, #r4

])

self.action_space = spaces.Box(a_low, a_high)

# self.action_space = spaces.Discrete(self.n_actions)

# We set the reward range, which is not compulsory but here we do it.

self.reward_range = (-numpy.inf, numpy.inf)

# Actions and Observations

self.init_motor_speed = rospy.get_param(

'/hummingbird/init_motor_speed')

# self.step_motor_speed = rospy.get_param(

# '/hummingbird/step_motor_speed')

# self.init_linear_speed_vector = Vector3()

# self.init_linear_speed_vector.x = rospy.get_param(

# '/hummingbird/init_linear_speed_vector/x')

# self.init_linear_speed_vector.y = rospy.get_param(

# '/hummingbird/init_linear_speed_vector/y')

# self.init_linear_speed_vector.z = rospy.get_param(

# '/hummingbird/init_linear_speed_vector/z')

#

# self.init_angular_turn_speed = rospy.get_param(

# '/hummingbird/init_angular_turn_speed')

self.running_step = rospy.get_param(

'/hummingbird/running_step')

#self.init_point = Float64()

#self.init_point.data = rospy.get_param("/hummingbird/init_position")

# Get WorkSpace Cube Dimensions

self.work_space_x_max = rospy.get_param("/hummingbird/work_space/x_max")

self.work_space_x_min = rospy.get_param("/hummingbird/work_space/x_min")

self.work_space_y_max = rospy.get_param("/hummingbird/work_space/y_max")

self.work_space_y_min = rospy.get_param("/hummingbird/work_space/y_min")

self.work_space_z_max = rospy.get_param("/hummingbird/work_space/z_max")

self.work_space_z_min = rospy.get_param("/hummingbird/work_space/z_min")

# Maximum RPY values

self.max_roll = rospy.get_param("/hummingbird/max_roll")

self.max_pitch = rospy.get_param("/hummingbird/max_pitch")

self.max_yaw = rospy.get_param("/hummingbird/max_yaw")

# Maximum linear v,a and angular w and a -- just define range

# Get Desired Point to Get

self.desired_point = Point()

self.desired_point.x = rospy.get_param("/hummingbird/desired_pose/x")

self.desired_point.y = rospy.get_param("/hummingbird/desired_pose/y")

self.desired_point.z = rospy.get_param("/hummingbird/desired_pose/z")

self.desired_point_epsilon = rospy.get_param("/hummingbird/desired_point_epsilon")

# We place the Maximum and minimum values of the X,Y,Z,R,P,Yof the pose

high = numpy.array([self.work_space_x_max, #pos.x

self.work_space_y_max, #pos.y

self.work_space_z_max, #pos.z

numpy.inf, #vel.x

numpy.inf, #vel.y

numpy.inf, #vel.z

numpy.inf, #acc.x

numpy.inf, #acc.y

numpy.inf, #acc.z

self.max_roll, #att.x

self.max_pitch, #att.y

self.max_yaw, #att.z

numpy.inf, #ang_vel.x

numpy.inf, #ang_vel.y

numpy.inf #ang_vel.z

])

# numpy.inf, #ang_acc.x

# numpy.inf, #ang_acc.y

# numpy.inf, #ang_acc.z

low = numpy.array([self.work_space_x_min, #pos.x

self.work_space_y_min, #pos.y

self.work_space_z_min, #pos.z

-numpy.inf, #vel.x

-numpy.inf, #vel.y

-numpy.inf, #vel.z

-numpy.inf, #acc.x

-numpy.inf, #acc.y

-numpy.inf, #acc.z

-1*self.max_roll, #att.x

-1*self.max_pitch, #att.y

-1*self.max_yaw, #att.z

-numpy.inf, #ang_vel.x

-numpy.inf, #ang_vel.y

-numpy.inf #ang_vel.z

])

# -numpy.inf, #ang_acc.x

# -numpy.inf, #ang_acc.y

# -numpy.inf, #ang_acc.z

self.observation_space = spaces.Box(low, high)

rospy.logdebug("ACTION SPACES TYPE===>" + str(self.action_space))

rospy.logdebug("OBSERVATION SPACES TYPE===>" + str(self.observation_space))

# Rewards (needs shaping)

self.closer_to_point_reward = rospy.get_param("/hummingbird/closer_to_point_reward")

self.not_ending_point_reward = rospy.get_param("/hummingbird/not_ending_point_reward")

self.end_episode_points = rospy.get_param("/hummingbird/end_episode_points")

self.cumulated_steps = 0.0

# Here we will add any init functions prior to starting the MyRobotEnv

super(HummingbirdHoverTaskEnv, self).__init__() #ros_ws_abspath

def _set_init_pose(self):

"""

Sets the Robot in its init motor speed

and lands the robot. Its preparing it to be reseted in the world.

"""

#raw_input("INIT SPEED PRESS")

init_motor_input = [self.init_motor_speed, self.init_motor_speed, self.init_motor_speed, self.init_motor_speed]

self.move_motor(init_motor_input)

# rospy.sleep(1.0) # wait for some time

# We Issue the landing command to be sure it starts landing

# raw_input("LAND PRESS")

# self.land()

return True

def _init_env_variables(self):

"""

Inits variables needed to be initialised each time we reset at the start

of an episode.

:return:

"""

#raw_input("TakeOFF PRESS")

# We TakeOff before sending any movement commands

# self.takeoff()

self.motor_speed = self.init_motor_speed

# For Info Purposes

self.cumulated_reward = 0.0

# We get the initial pose to measure the distance from the desired point.

gt_pose = self.get_base_pose()

self.previous_distance_from_des_point = self.get_distance_from_desired_point(gt_pose.position)

def _set_action(self, action):

"""

This set action will Set the motor speed of the hummingbird

:param action: The action that set s what movement to do next.

"""

rospy.logdebug("Start Set Action ==>"+str(action))

# We convert the actions to speed movements to send to the parent class of Hummingbird

# if action == 0: # Speed up

# self.motor_speed += self.step_motor_speed

# # self.last_action = "FORWARDS"

# elif action == 1: # Speed down

# self.motor_speed -= self.step_motor_speed

# # self.last_action = "BACKWARDS"

# We tell hummingbird the motor speed to set to execute

# print(action[0])

motor_input = 500 * action[0]

self.move_motor(motor_input)

rospy.sleep(self.running_step) # wait for some time

rospy.logdebug("END Set Action ==>"+str(action))

def _get_obs(self):

"""

Here we define what sensor data defines our robots observations

To know which Variables we have access to, we need to read the

droneEnv API DOCS

:return:

"""

rospy.logdebug("Start Get Observation ==>")

# We get the laser scan data

gt_pose = self.get_base_pose()

gt_linear_vel = self.get_base_linear_vel()

gt_angular_vel = self.get_base_angular_vel()

gt_linear_acc = self.get_base_linear_acc()

#gt_angular_acc = self.get_base_angular_acc()

# We get the orientation of the cube in RPY

roll, pitch, yaw = self.get_orientation_euler(gt_pose.orientation)

"""

observations = [ round(gt_pose.position.x, 1),

round(gt_pose.position.y, 1),

round(gt_pose.position.z, 1),

round(roll, 1),

round(pitch, 1),

round(yaw, 1),

round(sonar_value,1)]

"""

# We simplify a bit the spatial grid to make learning faster

observations = [round(gt_pose.position.x, 1),

round(gt_pose.position.y, 1),

round(gt_pose.position.z, 1),

round(gt_linear_vel.x, 1),

round(gt_linear_vel.y, 1),

round(gt_linear_vel.z, 1),

round(gt_linear_acc.x, 1),

round(gt_linear_acc.y, 1),

round(gt_linear_acc.z, 1),

round(roll, 1),

round(pitch, 1),

round(yaw, 1),

round(gt_angular_vel.x, 1),

round(gt_angular_vel.y, 1),

round(gt_angular_vel.z, 1),

# round(gt_angular_acc.x, 1),

# round(gt_angular_acc.y, 1),

# round(gt_angular_acc.z, 1),

]

rospy.logdebug("Observations==>"+str(observations))

rospy.logdebug("END Get Observation ==>")

# print(observations)

return observations

def _is_done(self, observations):

"""

The done can be done due to three reasons:

1) It went outside the workspace

2) It flipped due to a crash or something

3) It has reached the desired point

"""

episode_done = False

current_position = Point()

current_position.x = observations[0]

current_position.y = observations[1]

current_position.z = observations[2]

current_orientation = Point()

current_orientation.x = observations[9]

current_orientation.y = observations[10]

current_orientation.z = observations[11]

is_inside_workspace_now = self.is_inside_workspace(current_position)

# sonar_detected_something_too_close_now = self.sonar_detected_something_too_close(

# sonar_value)

drone_flipped = self.drone_has_flipped(current_orientation)

has_reached_des_point = self.is_in_desired_position(

current_position, self.desired_point_epsilon)

rospy.logwarn(">>>>>> DONE RESULTS <<<<<")

if not is_inside_workspace_now:

rospy.logerr("is_inside_workspace_now=" +

str(is_inside_workspace_now))

else:

rospy.logwarn("is_inside_workspace_now=" +

str(is_inside_workspace_now))

if drone_flipped:

rospy.logerr("drone_flipped="+str(drone_flipped))

else:

rospy.logwarn("drone_flipped="+str(drone_flipped))

if has_reached_des_point:

rospy.logerr("has_reached_des_point="+str(has_reached_des_point))

else:

rospy.logwarn("has_reached_des_point="+str(has_reached_des_point))

# We see if we are outside the Learning Space

episode_done = not(

is_inside_workspace_now) or drone_flipped or has_reached_des_point

if episode_done:

rospy.logerr("episode_done====>"+str(episode_done))

else:

rospy.logwarn("episode_done====>"+str(episode_done))

return episode_done

def _compute_reward(self, observations, done):

current_position = Point()

current_position.x = observations[0]

current_position.y = observations[1]

current_position.z = observations[2]

distance_from_des_point = self.get_distance_from_desired_point(

current_position)

distance_difference = distance_from_des_point - \

self.previous_distance_from_des_point

if not done:

# If there has been a decrease in the distance to the desired point, we reward it

if distance_difference < 0.0:

rospy.logwarn("DECREASE IN DISTANCE GOOD")

reward = self.closer_to_point_reward

else:

rospy.logerr("INCREASE IN DISTANCE BAD")

reward = 0

else:

if self.is_in_desired_position(current_position, epsilon=0.5):

reward = self.end_episode_points

else:

reward = -1*self.end_episode_points

self.previous_distance_from_des_point = distance_from_des_point

rospy.logdebug("reward=" + str(reward))

self.cumulated_reward += reward

rospy.logdebug("Cumulated_reward=" + str(self.cumulated_reward))

self.cumulated_steps += 1

rospy.logdebug("Cumulated_steps=" + str(self.cumulated_steps))

return reward

# Internal TaskEnv Methods

def is_in_desired_position(self, current_position, epsilon=0.05):

"""

It return True if the current position is similar to the desired poistion

"""

is_in_desired_pos = False

x_pos_plus = self.desired_point.x + epsilon

x_pos_minus = self.desired_point.x - epsilon

y_pos_plus = self.desired_point.y + epsilon

y_pos_minus = self.desired_point.y - epsilon

z_pos_plus = self.desired_point.z + epsilon

z_pos_minus = self.desired_point.z - epsilon

x_current = current_position.x

y_current = current_position.y

z_current = current_position.z

x_pos_are_close = (x_current <= x_pos_plus) and (

x_current > x_pos_minus)

y_pos_are_close = (y_current <= y_pos_plus) and (

y_current > y_pos_minus)

z_pos_are_close = (z_current <= z_pos_plus) and (

z_current > z_pos_minus)

is_in_desired_pos = x_pos_are_close and y_pos_are_close and z_pos_are_close

rospy.logwarn("###### IS DESIRED POS ? ######")

rospy.logwarn("current_position"+str(current_position))

rospy.logwarn("x_pos_plus"+str(x_pos_plus) +

",x_pos_minus="+str(x_pos_minus))

rospy.logwarn("y_pos_plus"+str(y_pos_plus) +

",y_pos_minus="+str(y_pos_minus))

rospy.logwarn("z_pos_plus"+str(z_pos_plus) +

",z_pos_minus="+str(z_pos_minus))

rospy.logwarn("x_pos_are_close"+str(x_pos_are_close))

rospy.logwarn("y_pos_are_close"+str(y_pos_are_close))

rospy.logwarn("z_pos_are_close"+str(z_pos_are_close))

rospy.logwarn("is_in_desired_pos"+str(is_in_desired_pos))

rospy.logwarn("############")

return is_in_desired_pos

def is_inside_workspace(self, current_position):

"""

Check if the Drone is inside the Workspace defined

"""

is_inside = False

rospy.logwarn("##### INSIDE WORK SPACE? #######")

rospy.logwarn("XYZ current_position"+str(current_position))

rospy.logwarn("work_space_x_max"+str(self.work_space_x_max) +

",work_space_x_min="+str(self.work_space_x_min))

rospy.logwarn("work_space_y_max"+str(self.work_space_y_max) +

",work_space_y_min="+str(self.work_space_y_min))

rospy.logwarn("work_space_z_max"+str(self.work_space_z_max) +

",work_space_z_min="+str(self.work_space_z_min))

rospy.logwarn("############")

if current_position.x > self.work_space_x_min and current_position.x <= self.work_space_x_max:

if current_position.y > self.work_space_y_min and current_position.y <= self.work_space_y_max:

if current_position.z > self.work_space_z_min and current_position.z <= self.work_space_z_max:

is_inside = True

return is_inside

def drone_has_flipped(self, current_orientation):

"""

Based on the orientation RPY given states if the drone has flipped

"""

has_flipped = True

self.max_roll = rospy.get_param("/hummingbird/max_roll")

self.max_pitch = rospy.get_param("/hummingbird/max_pitch")

rospy.logwarn("#### HAS FLIPPED? ########")

rospy.logwarn("RPY current_orientation"+str(current_orientation))

rospy.logwarn("max_roll"+str(self.max_roll) +

",min_roll="+str(-1*self.max_roll))

rospy.logwarn("max_pitch"+str(self.max_pitch) +

",min_pitch="+str(-1*self.max_pitch))

rospy.logwarn("############")

if current_orientation.x > -1*self.max_roll and current_orientation.x <= self.max_roll:

if current_orientation.y > -1*self.max_pitch and current_orientation.y <= self.max_pitch:

has_flipped = False

return has_flipped

def get_base_pose(self):

odom = self.get_odom()

base_pose = odom.pose.pose

return base_pose

def get_base_linear_vel(self):

odom = self.get_odom()

base_linear_vel = odom.twist.twist.linear

return base_linear_vel

def get_base_angular_vel(self):

odom = self.get_odom()

base_angular_vel = odom.twist.twist.angular

return base_angular_vel

def get_base_rpy(self):

imu = self.get_imu()

base_orientation = imu.orientation

euler_rpy = Vector3()

euler = euler_from_quaternion([ base_orientation.x,

base_orientation.y,

base_orientation.z,

base_orientation.w]

)

euler_rpy.x = euler[0]

euler_rpy.y = euler[1]

euler_rpy.z = euler[2]

return euler_rpy

def get_base_linear_acc(self):

imu = self.get_imu()

base_linear_acc = imu.linear_acceleration

return base_linear_acc

def get_distance_from_desired_point(self, current_position):

"""

Calculates the distance from the current position to the desired point

:param start_point:

:return:

"""

distance = self.get_distance_from_point(current_position,

self.desired_point)

return distance

def get_distance_from_point(self, pstart, p_end):

"""

Given a Vector3 Object, get distance from current position

:param p_end:

:return:

"""

a = numpy.array((pstart.x, pstart.y, pstart.z))

b = numpy.array((p_end.x, p_end.y, p_end.z))

distance = numpy.linalg.norm(a - b)

return distance

def get_orientation_euler(self, quaternion_vector):

# We convert from quaternions to euler

orientation_list = [quaternion_vector.x,

quaternion_vector.y,

quaternion_vector.z,

quaternion_vector.w]

roll, pitch, yaw = euler_from_quaternion(orientation_list)

return roll, pitch, yaw

And the learning part is basically the same as mentioned above, which just calls the learning scripts from the baselines and basically leveraging it.

Strange thing here is that when I tried the learning with TRPO, it worked (meaning that the it automatically resets the env after the episode, but i have no idea why this is not the case for algorithms like PPO and A2C.

For the learning part, I’m using tf2.

I’m sorry that I cannot share the whole rosject with you, my goal was to try to run everything on my local machine, but if you need more information i will try to share the whole thing by pushing this to the git or sth.

Thank you very much for your time, and looking forward to your answer.

Sincerely,

Taehyoung Kim

However, now everyhing is resolved and I’m back!

However, now everyhing is resolved and I’m back!